NVIDIA Snaps Up Groq in a $20 Billion Move That Reshapes AI Hardware

NVIDIA has completed its largest transaction to date, agreeing to pay $20 billion in an all-cash deal for Groq, a hot startup that has been making headlines with its custom-built chips that can run AI models in real time at speeds faster than anticipated.

Groq was formed in 2016 by Jonathan Ross as well as a group of ex-Googlers, and Ross played a crucial role in developing Google’s initial tensor processing unit, a chip designed to handle AI tasks far more efficiently than normal graphics cards. That experience certainly shaped Groq’s orientation from the start; rather than designing general-purpose hardware, the team focused on one essential step of AI work: inference. Sure, training an AI model is a huge thing and requires a lot of parallel computing power, but inference is where these models come into play, and that means using them to generate responses, make predictions, or spew out conclusions quickly.

Sale

ASUS ROG Xbox Ally – 7” 1080p 120Hz Touchscreen Gaming Handheld, 3-month Xbox Game Pass Premium…

- XBOX EXPERIENCE BROUGHT TO LIFE BY ROG The Xbox gaming legacy meets ROG’s decades of premium hardware design in the ROG Xbox Ally. Boot straight into…

- XBOX GAME BAR INTEGRATION Launch Game Bar with a tap of the Xbox button or play your favorite titles natively from platforms like Xbox Game Pass,…

- ALL YOUR GAMES, ALL YOUR PROGRESS Powered by Windows 11, the ROG Xbox Ally gives you access to your full library of PC games from Xbox and other game…

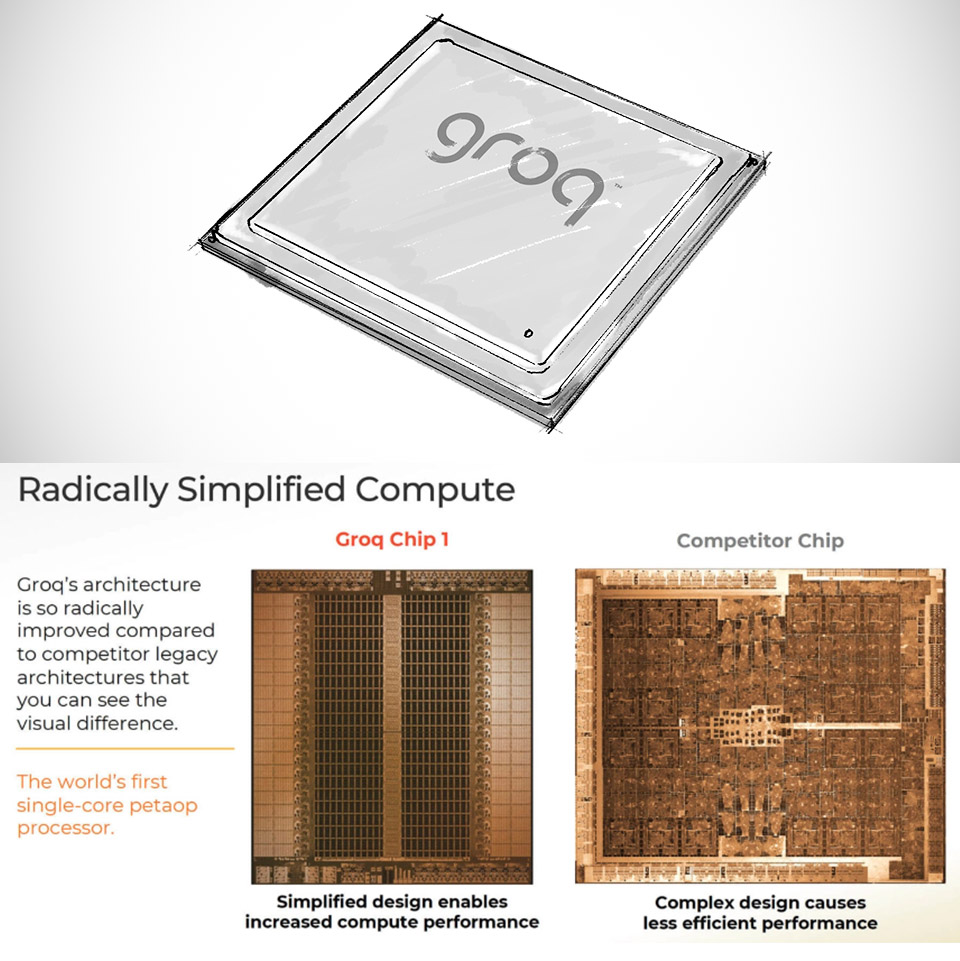

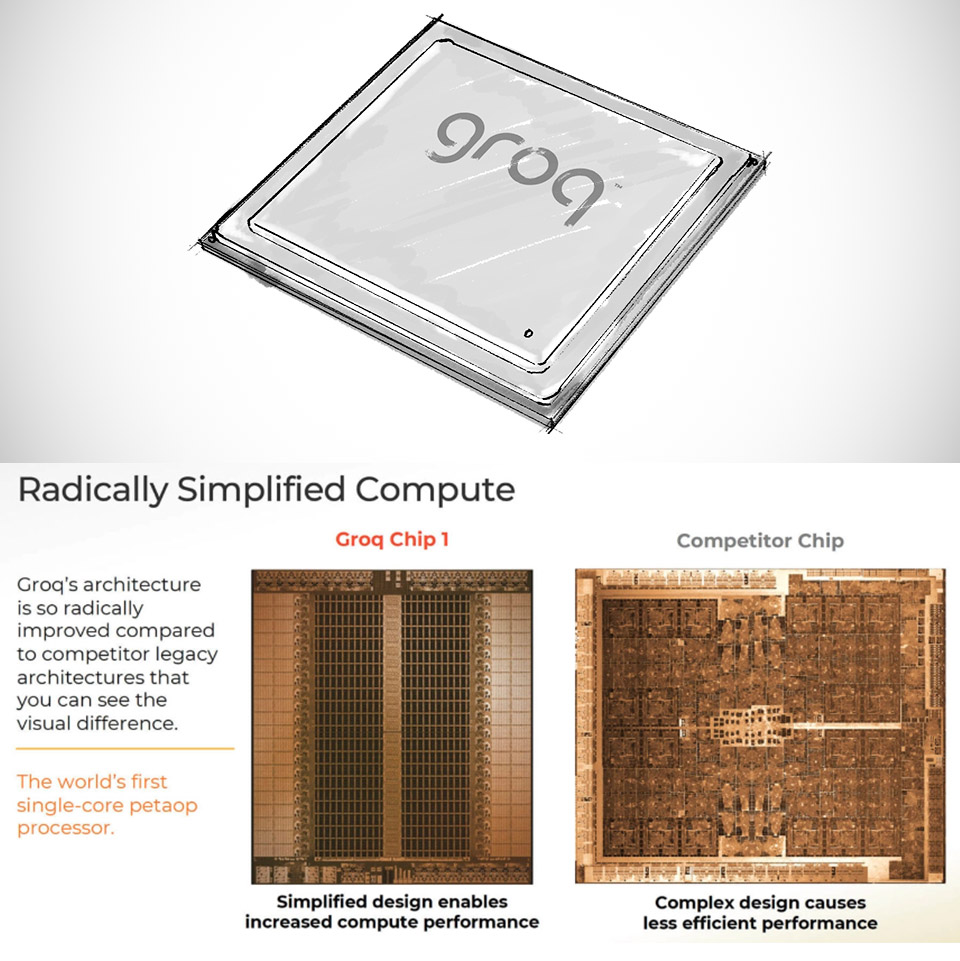

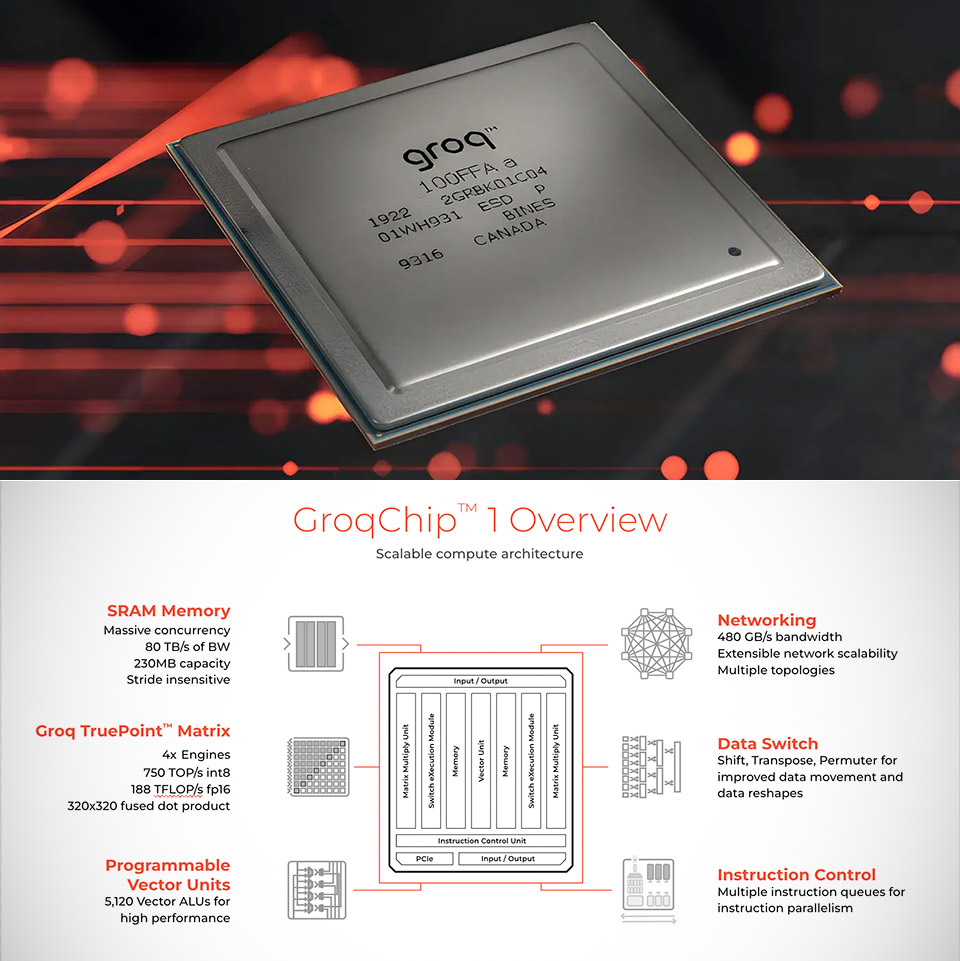

Groq’s processors, known as language processing units (LPUs for short), use an entirely different approach from NVIDIA’s graphics processing units. The latter are all about the heavy lifting of training, handling thousands of tasks simultaneously and as efficiently as feasible. However, LPUs are primarily concerned with simplifying the sequential stages required for inference. Data passes through them in a smooth, steady stream, similar to an assembly line where each component of the process just gets on with its work without any delays or hold-ups. Because the memory is literally inside the chip, there’s no need to continually switch between different components.

When you run the numbers, Groq’s hardware starts to look seriously impressive. They can generate hundreds of tokens per second for big language models, which is far quicker than the average graphics unit in real-time applications. You get responses nearly instantly, and with less total power use to boot. When it comes to scaling up data centers to handle millions of inquiries each day, energy efficiency becomes an important consideration. Groq claims they can run models up to ten times faster while consuming a quarter of the power, making them ideal for chatbots, search tools, and live AI services that require quick responses.

Photo credit: AIXX

NVIDIA already has a commanding lead in training hardware, supplying the vast majority of chips used to construct today’s cutting-edge models. However, inference is the next big thing, and it will result in a significant increase in demand for quick, reliable, and high-volume processing. That’s exactly what NVIDIA gets out of this transaction, bringing Groq’s technology in-house. Their CEO, Jensen Huang, has stated that they intend to integrate Groq’s super-low delay processors into their larger AI systems, offering customers more options for performing critical real-time jobs.

[Source]

NVIDIA Snaps Up Groq in a $20 Billion Move That Reshapes AI Hardware

#NVIDIA #Snaps #Groq #Billion #Move #Reshapes #Hardware